Just thought it would be cool to share this video. I love Blender 2.8: the interface, the way it works... Thanks to Andrew Kramer, who introduced me to Blender back in 2006. It was still Blender 2.3 (or 2.4, I can't remember) back then... How far it has come. As a guy who works on open source, I appreciate the amount of effort and time it takes to develop software like this... Keep it up, Blender Foundation. If you use Blender, think about making a monthly contribution.

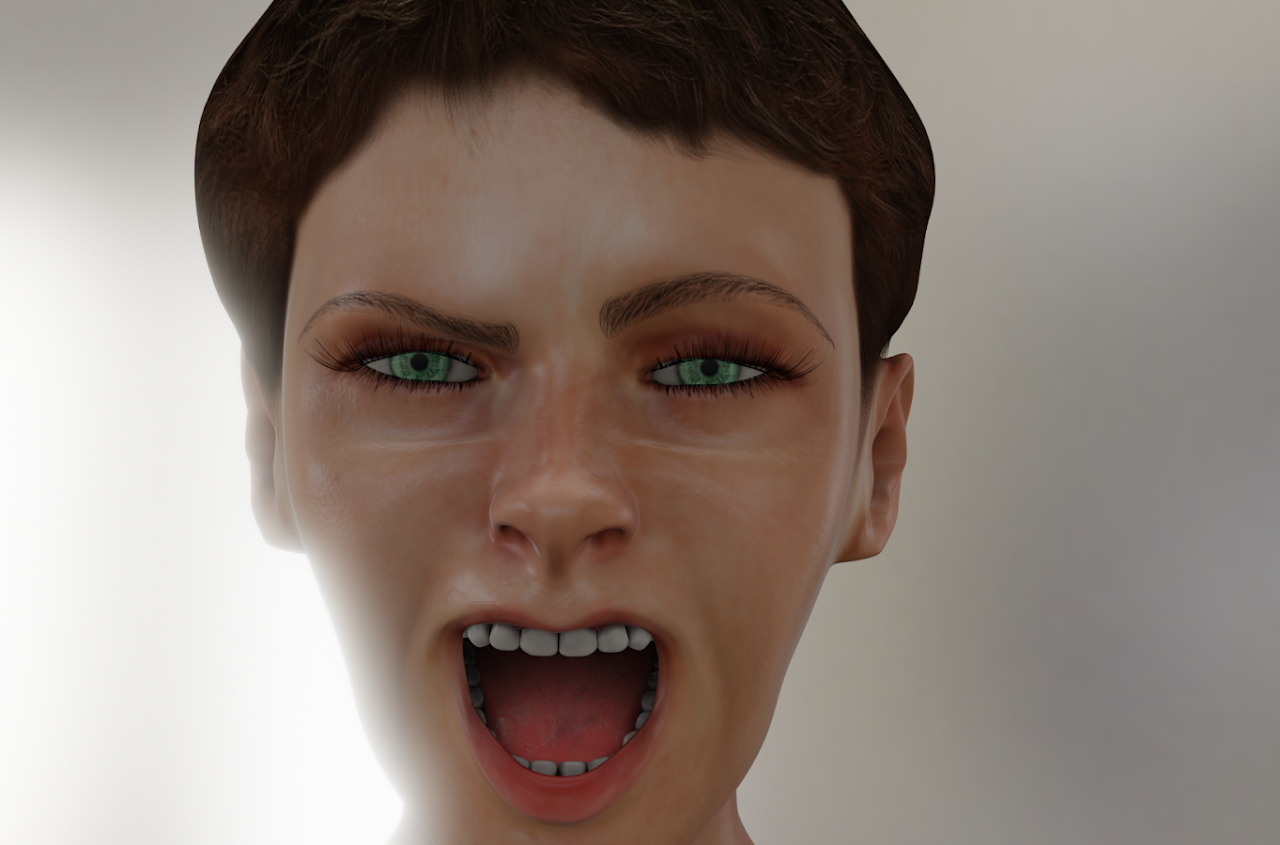

I think there is an improvement, no? Going forward, though, I'll probably just render my test animations in EEVEE. Cycles is a lot more time-consuming, but everything looks better in Cycles. I think EEVEE needs a different material setup. The new blend file is here.

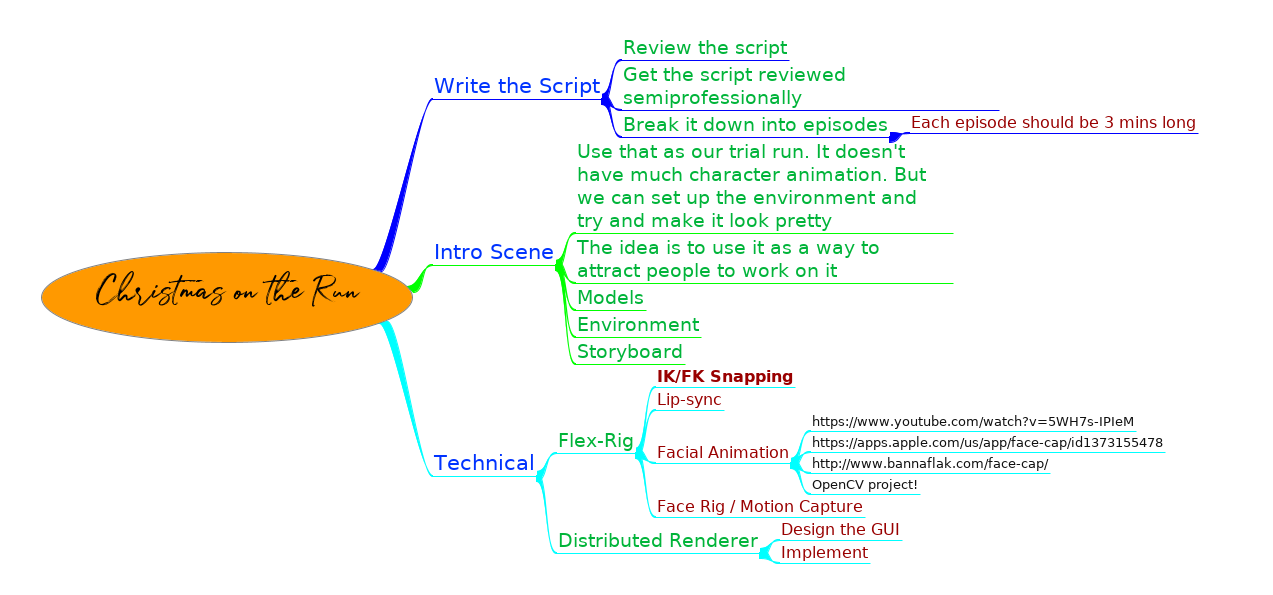

The Research & Development phase of this project is turning out to be more intense than I first anticipated. I'm concentrating on my character animation workflow at the moment. My goal is to get/create a set of tools that enhance the animation process.

For the last while, I have been working on a facial animation rig for the ManuelBastioniLab. The original author has decided to stop supporting it and developing further features, so a group of people, including me, decided to pick up the slack.

The characters created by the ManuelBastioniLab are good and the rig is very usable; however, creating facial expressions requires animating shape keys directly, which makes the face difficult to animate. I decided to create a face rig to drive the shape keys. As usual, the concept sounds easy, but the implementation is riddled with technical details I had to wrap my mind around, so it took a while to complete. The first version of the rig is now available in the new official MB-Lab addon, and also in a version of the addon I'm maintaining, here.
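For anyone curious how a face rig can "drive" shape keys: each control bone's offset gets mapped onto a shape key's 0 to 1 value, usually through a driver. Here's a minimal sketch of that mapping in plain Python, assuming one control moving along a single axis feeds two opposing keys. The key names (`mouth_smile`, `mouth_frown`) and the `max_offset` travel distance are hypothetical, not the ones used in the MB-Lab face rig.

```python
def clamp(value: float, low: float = 0.0, high: float = 1.0) -> float:
    """Restrict value to the closed range [low, high]."""
    return max(low, min(high, value))


def control_to_shape_keys(offset: float, max_offset: float = 0.05) -> dict:
    """Split one control bone's offset along a single axis into two
    opposing shape key values.

    Hypothetical setup: moving the control up (positive offset) drives a
    "smile" key, moving it down drives a "frown" key. max_offset is the
    bone travel (in Blender units) that maps to a fully applied key.
    """
    if max_offset <= 0:
        raise ValueError("max_offset must be positive")
    normalized = offset / max_offset
    return {
        "mouth_smile": clamp(normalized),   # only positive travel counts
        "mouth_frown": clamp(-normalized),  # only negative travel counts
    }


if __name__ == "__main__":
    print(control_to_shape_keys(0.025))  # control halfway up
    print(control_to_shape_keys(-0.1))   # past the limit, so clamped
```

Splitting one axis into two keys keeps each shape key in its normal 0 to 1 range instead of relying on negative values. In Blender, the same arithmetic would typically sit in a driver expression on each key's `value`.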

The next project I'm working on is a lip-syncing feature in the Lab for creating lip-sync animation. I've been looking at different open source Automatic Speech Recognition (ASR) software to work with. The idea is to run a speech clip through the ASR engine, which produces timing information for when each phoneme is spoken; I then take this data into Blender and create a first-pass lip-sync animation. There is Rhubarb, along with an equivalent add-on for Blender, that does this, but it's only for Windows and Mac. I'm looking at either porting it to Linux or developing my own. Being who I am, I'm leaning towards developing my own, just to learn how things work.

Well... I finally have a program that takes a speech clip and breaks it down into its phonemes, with a start time and duration for each phoneme. An excerpt of the JSON file looks like this:

```json
}, {
    "word": "letter",
    "start": 945,
    "duration": 36,
    "phonemes": [{
        "phoneme": "L",
        "start": 945,
        "duration": 6
    }, {
        "phoneme": "EH",
        "start": 951,
        "duration": 7
    }, {
        "phoneme": "T",
        "start": 958,
        "duration": 8
    }, {
        "phoneme": "ER",
        "start": 966,
        "duration": 16
    }]
}]
```
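Turning these timings into animation data is mostly unit conversion plus a phoneme-to-mouth-shape lookup. The sketch below assumes the `start` and `duration` values are in hundredths of a second, that the scene runs at 24 fps, and that the file is a top-level list of word objects (as the closing `}]` suggests); none of that is stated above, so adjust to match the real output. The `PHONEME_TO_VISEME` table and its shape key names are hypothetical placeholders for whatever the ManuelBastioniLab character exposes.

```python
import json
from typing import Dict, List, Tuple

# Hypothetical phoneme -> mouth-shape (viseme) shape key names. A real table
# would cover every phoneme the recognizer can emit.
PHONEME_TO_VISEME: Dict[str, str] = {
    "L": "viseme_L",
    "EH": "viseme_E",
    "T": "viseme_T",
    "ER": "viseme_R",
}

TIME_UNITS_PER_SECOND = 100  # assumption: timings are in hundredths of a second
FPS = 24                     # assumption: scene frame rate

SAMPLE_JSON = """[{"word": "letter", "start": 945, "duration": 36, "phonemes": [
    {"phoneme": "L", "start": 945, "duration": 6},
    {"phoneme": "EH", "start": 951, "duration": 7},
    {"phoneme": "T", "start": 958, "duration": 8},
    {"phoneme": "ER", "start": 966, "duration": 16}]}]"""


def to_frame(time_units: int) -> int:
    """Convert a timing value to the nearest scene frame."""
    return round(time_units * FPS / TIME_UNITS_PER_SECOND)


def phoneme_keyframes(words: List[dict]) -> List[Tuple[int, str, float]]:
    """Build (frame, shape_key, value) keys for a crude first pass: each
    viseme switches on at the phoneme's start and off at its end."""
    keys: List[Tuple[int, str, float]] = []
    for word in words:
        for ph in word["phonemes"]:
            shape_key = PHONEME_TO_VISEME.get(ph["phoneme"])
            if shape_key is None:
                continue  # unmapped phoneme: skip rather than guess a shape
            start = to_frame(ph["start"])
            end = to_frame(ph["start"] + ph["duration"])
            keys.append((start, shape_key, 1.0))
            # Keep at least one frame between the on and off keys.
            keys.append((max(end, start + 1), shape_key, 0.0))
    return keys


if __name__ == "__main__":
    for key in phoneme_keyframes(json.loads(SAMPLE_JSON)):
        print(key)
```

In Blender, each tuple could then be applied by setting the matching key block's `value` and calling `keyframe_insert("value", frame=frame)` on it. A proper pass would also want overlapping ramps between neighbouring visemes rather than hard on/off keys.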

The next step is to take this information and convert it into animation data for a ManuelBastioniLab character. The code is here. It still needs work before it compiles and builds easily (Linux only... sorry).

I have to admit, I'm a bit rusty with animation. The last time I did any Blender animation was on "Your Song". Anyway, I decided to get my hands a bit dirty and did a quick walk cycle. It's still not perfect: it needs some smoothing and overlapping action, and it's almost pose-to-pose at the moment. I'm going to improve on it over the next couple of days, then do a couple of other walk cycles. I'll be using Kevin Parry for reference; I think he's awesome. Here is his walk cycle reference video. The next step is to do a short 30-second scene to work the kinks out of my workflow.

I rendered the scene twice, once in Cycles and once in EEVEE. I still think Cycles is superior, but I'm guessing EEVEE will need its lighting tweaked to work around its limitations. I uploaded the Blender files here. You might need to remap them because one file links to the other, and the textures might need to be remapped too.

I still think Cycles does a clearly better job rendering in low light; it mimics the shadows properly. I admit the materials are still not quite there, but even without great materials Cycles does a pretty good job... I'm starting to get the feeling that I might want to render the final movie in Cycles. I can always have two versions: the rough copy can be in EEVEE.

I updated camera_dolly_crane_rigs.py for Blender 2.8.

This addon creates some nice controls for the camera to make it easy to operate.

Some technical mumbo jumbo to follow.

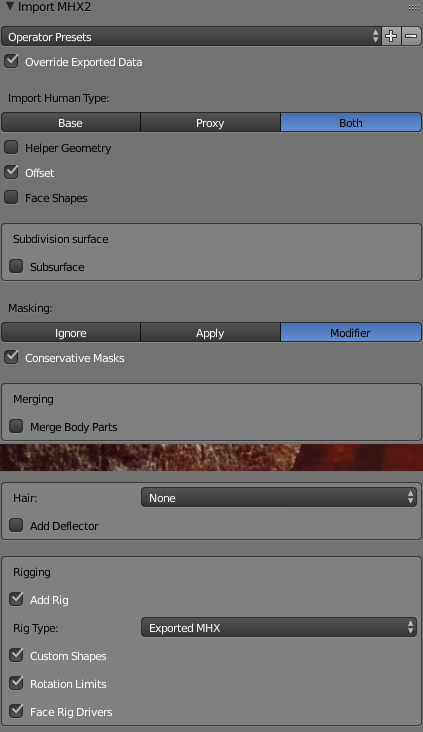

If you need to import Makehuman models using MHX2, you'll need to follow the instructions here.

The scripts provided work fine in 2.79, but the API changed in 2.80, so you'll get a Python error when importing. I fixed the script. I wanted to push a patch into their repository, but don't know how. Until I figure it out, here is the diff (lines marked `<` come from the fixed file, lines marked `>` from the original). You can apply it to your copy of the MHX2 script under 'scripts/addons/import_runtime_mhx2/utils.py' to get it working:

```diff
diff utils.py ../../../../2.79/scripts/addons/import_runtime_mhx2/utils.py
109c109
< ob.select_set(value)
---
> ob.select_set(action=('SELECT' if value else 'DESELECT'))
136,137c136,137
< ob1.select_set(False)
< ob.select_set(False)
---
> ob1.select_set(action='DESELECT')
> ob.select_set(action='SELECT')
```
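If you'd rather keep a single copy of utils.py that runs on both versions instead of patching it, a small wrapper can try the new call first and fall back to the old one. This is a sketch, not part of MHX2: `set_selected` is a hypothetical helper name, and it assumes the older, action-based signature rejects a positional boolean with a `TypeError`.

```python
def set_selected(ob, value: bool) -> None:
    """Select or deselect ob under either select_set signature.

    Newer API ('<' side of the diff): ob.select_set(value)
    Older API ('>' side of the diff): ob.select_set(action='SELECT'/'DESELECT')
    Assumption: the older signature raises TypeError for a positional bool.
    """
    try:
        ob.select_set(value)
    except TypeError:
        # Fall back to the action-based call used by the unpatched script.
        ob.select_set(action='SELECT' if value else 'DESELECT')
```

The call sites then become `set_selected(ob, value)` and `set_selected(ob1, False)`. The trade-off is that a `TypeError` raised for some unrelated reason would also trigger the fallback, so it's worth dropping the wrapper once everyone is on the released 2.80 API.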

I'm starting to realize the limitations of the Makehuman rig.

I've been watching a lot of animated films lately: first, to decide on the style I want, and second, to set my expectations. This is going to be a long-haul project. Hopefully the quality will improve over time, especially if other artists participate. But as it stands, I'll be generating my characters using Makehuman, Mixamo or ManuelBastioniLAB. Initially, I wanted to use cartoon characters similar to Disney and Pixar films like Tangled or Inside Out. However, the only character generator I know of for this is CG-Cookie's Flex Rig. I like the characters it produces, but the clothing is not great. I could go down the road of modeling clothes for the characters, but as I continued writing the story, the cartoon look didn't seem to fit how the story is turning out.

It appears I'm settling on a mix between cartoon and realistic-looking characters. These can be produced with the character generation packages I mentioned previously and still fit within the story. I'll probably need to rig them myself using Blender's Rigify.

My hope, then, is to reach quality somewhere in the ballpark of video games:

These are still pretty high quality, so we'll see how it goes. Animation plays a big part in how real something looks. Some things I'll defer until later in the project, like clothing and hair simulation. I'll start with mesh clothing, then, as I get better and faster, I'll add clothing and hair simulation.

Of course all this is up for change. I'm still at the very beginning.

Rigging is a pain in the butt. I've been looking for the easiest way to go about it. It looks like using a Makehuman model and importing it with the MHX2 script (documentation here), with the settings shown in the attached image, gives me what (I think) I want.

The MHX body rig is actually pretty good, as far as I can tell. Besides, there is a facial rig with pretty decent weighting, which should allow me to make pretty good facial expressions. I'm gonna have to test it some more. I'll be adding some screenshots, and I'll upload the Blender file as soon as I have the rig working.

It'll need some modifications. I'll have to create a better way to control the face. It's finicky at the moment.

The next challenge is going to be clothing. Makehuman clothing is not great, so I'll need to see what I can come up with.

I also need to test the Manuel Bastioni Lab. The issue with that is the developer has stopped the project, so it's not going to be improved.

UPDATE:

I found this addon, which allows using Rigify with the Manuel Bastioni Lab. I'm still looking for a solution that gives Manuel Bastioni characters a face rig.

There are a few resources to keep track of. I'm not a modeller, and I don't want to spend time modeling characters when I can use a good character generator. At first I was thinking of using the CGCookie Flex Rig, but then I decided I want a slightly more realistic model. There are three tools to consider:

- Makehuman

- Manuel Bastioni Lab

- Fuse 3D: this is now an Adobe product, but version 1.3, from before Adobe bought it, is available as a free download from https://store.steampowered.com/. You can also download a bunch of characters from www.mixamo.com.

I quite like Fuse 3D. The models are not very high poly, but they still look good. Hopefully, I'll have some animation tests happening soon. I'll have to rig them first, though.

I spent the last couple of days frustrated with the Flex Rig file. I asked a question on Blender Stack Exchange, but no one answered: https://blender.stackexchange.com/questions/167308/cg-cookie-flex-rig-linking-problems

There were four problems:

- Invalid drivers and cyclic dependencies
    - I thought these were the reason for the other issues listed below. It turns out they weren't, but I cleaned them up anyway. I had to write some Python scripts to sift through all the drivers and find the invalid ones. At one point I had to open the blend file in a hex editor to find a bad driver that I couldn't see through the interface.

- The rig wasn't visible after I made the proxy; it was hidden behind the linked character.
    - I describe how I solved this problem in this video.

- The bone shapes had modifiers on them, which disappeared when I executed the rig_ui script (or under other mysterious circumstances).
    - I just had to apply the modifiers so they don't disappear.

- The IK leg was bending backwards, so the knee bent backwards instead of forwards. It reminded me of the aliens in that Charlie Sheen movie (The Arrival).
    - I describe how I solved this issue in the same video above.
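For the driver clean-up, a script along these lines can list the drivers Blender has flagged as broken. It's a minimal sketch of the idea, not the scripts mentioned above. It only reads attributes that exist on Blender's types (`animation_data.drivers`, `FCurve.is_valid`, `Driver.is_valid`, `data_path`, `array_index`), and it's written against plain objects so it can also be exercised outside Blender. These flags are set when Blender fails to evaluate a curve or driver, so the scene should have been evaluated at least once; cyclic dependencies are not detected by this check.

```python
from types import SimpleNamespace
from typing import Iterable, List, Tuple


def find_invalid_drivers(datablocks: Iterable) -> List[Tuple[str, str, int]]:
    """Return (datablock name, data_path, array_index) for each driver
    F-Curve flagged as invalid.

    In Blender, pass collections such as bpy.data.objects,
    bpy.data.armatures or bpy.data.shape_keys.
    """
    bad: List[Tuple[str, str, int]] = []
    for block in datablocks:
        anim = getattr(block, "animation_data", None)
        if anim is None:
            continue  # no animation data, so no drivers on this datablock
        for fcurve in anim.drivers:
            # FCurve.is_valid / Driver.is_valid are False once Blender has
            # failed to evaluate the curve or its driver.
            if not (fcurve.is_valid and fcurve.driver.is_valid):
                bad.append((block.name, fcurve.data_path, fcurve.array_index))
    return bad


if __name__ == "__main__":
    # Offline demo with stand-in objects shaped like bpy data.
    ok = SimpleNamespace(data_path="location", array_index=0,
                         is_valid=True, driver=SimpleNamespace(is_valid=True))
    broken = SimpleNamespace(data_path='pose.bones["jaw"].rotation_euler', array_index=2,
                             is_valid=True, driver=SimpleNamespace(is_valid=False))
    rig = SimpleNamespace(name="FlexRig", animation_data=SimpleNamespace(drivers=[ok, broken]))
    print(find_invalid_drivers([rig]))
```

Inside Blender, you would loop over the relevant `bpy.data` collections and print whatever comes back, then fix or delete those drivers from the Drivers editor.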

I have to admit this behaviour is kinda weird all around. I'm not sure if it's because the file was originally made in 2.79 or what. When I used Rigify with MB-Lab, things went a lot smoother. Anyway, moving on.

FYI, the file can be downloaded from here.

I'm back at the technical work again. I think I've settled on the CG Cookie Flex Rig. The character is quite decent and the rig is very nice, but it needed IK/FK snapping for the arms and legs. I spliced together code from the MB-Lab character with Rigify, pulling out the bits and pieces needed to implement IK/FK snapping on the CG Cookie rig. That seems to work pretty well. You can download the updated blend file here.
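On the IK/FK snapping side, most of the work is copying one chain's pose onto the other. The fiddly part of snapping FK to IK is placing the IK pole target so the elbow or knee bends the same way it did in the FK pose. A common approach is to put the pole in the plane of the three joints, pushed out from the middle joint, away from the line between the first and last joint. The sketch below shows only that geometry in plain Python; it's a generic illustration, not the code pulled from Rigify or MB-Lab, and `pole_target_position` is a hypothetical name.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def pole_target_position(root: Vec3, mid: Vec3, tip: Vec3, distance: float) -> Vec3:
    """Place an IK pole target in the plane of a bent limb.

    root, mid and tip are world-space joint positions taken from the FK
    chain (shoulder/elbow/wrist or hip/knee/ankle). The result lies
    `distance` away from mid, on the side the middle joint sticks out to.
    """
    axis = _sub(tip, root)
    axis_len_sq = _dot(axis, axis)
    if axis_len_sq == 0.0:
        raise ValueError("root and tip joints coincide")
    # Closest point to the middle joint on the root-tip line.
    t = _dot(_sub(mid, root), axis) / axis_len_sq
    foot = (root[0] + t * axis[0], root[1] + t * axis[1], root[2] + t * axis[2])
    bend = _sub(mid, foot)
    bend_len = math.sqrt(_dot(bend, bend))
    if bend_len < 1e-8:
        raise ValueError("limb is straight; pole direction is ambiguous")
    scale = distance / bend_len
    return (mid[0] + bend[0] * scale, mid[1] + bend[1] * scale, mid[2] + bend[2] * scale)
```

Inside Blender, the joint positions would come from the FK pose bones (for example `armature_obj.matrix_world @ pose_bone.head`), and the result would be written to the pole control's location. A straight limb raises an error on purpose, since every direction around it is equally valid; in that case it's probably best to leave the pole where it was.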

Here is my plan for the next while: